Google’s biggest leak to date was two weeks ago.

I was one of the first to cover it and made this 34-minute podcast on it. My most popular podcast yet.

One of the most interesting things about the leak is how it confirms SEO experiments that Google reps previously called “made-up crap.”

The leak shows many ranking factors that Google still uses or used in the past – things they said they didn’t/couldn’t measure.

Now, it’s important to state that we don’t have the weights associated with the factors; however, there are so many experiments out there that show what is important and what is not.

So, in this article, I’ll share some things learned from SEO experiments over the years.

Click data

Rand Fishkin, who is primarily responsible for popularizing the leak, used to do many SEO experiments with his large audience.

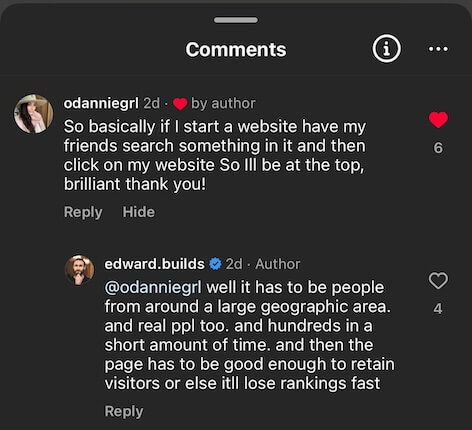

His experiments with click velocity are especially interesting.

In two separate experiments, he:

- Tweeted out instructions to his audience of hundreds of thousands.

- Told them to Google a specific keyword and click on a specific result at the bottom of Page 1 of Google.

- Got several hundred clicks in 3 hours.

- Experiment 1: 228 clicks.

- Experiment 2: 375 clicks.

- Had both pages go from the bottom of Page 1 of Google to the #1 spot as a result.

He then repeated this on other separate occasions. More people tested this too. Everybody confirmed Rand Fishkin’s findings.

Then a Google rep said, “Dwell time, CTR, whatever Fishkin’s new theory is, those are generally made-up crap.”

Rand, naturally, took this personally. When he published his article about the “leaked Google Search API documents,” he said, “In their early years, Google’s search team recognized a need for full clickstream data (every URL visited by a browser) for a large percent of web users to improve their search engine’s result quality.” This “clickstream data served as the key motivation for the creation of the Chrome browser.”

Makes sense, though.

TikTok and Instagram recommend content based on engagement and user intent satisfaction. Why wouldn’t Google want to do the same?

This is interesting information, but it’s important to keep this in mind:

Content stealing

I made a podcast about this this morning at 7 AM. This was my studio for the podcast:

SEO Dan Petrovic tested copying others’ content and putting it on higher Domain Authority websites without permission.

In all 4 of his tests, the higher Domain Authority (what SEO Domain Authority is) sites ranked above the original content.

Crazy.

Then, the good news. Dan got a warning in his Google Search Console and had to take down the pages.

But that takes us to today, where this no longer matters.

People are now doing this on User-Generated Content websites where there are fewer bad consequences for getting caught.

AND Google is making it easy to do this:

Reddit is outranking you, even for your own content, because of THREE different factors, with the last being the one most people in SEO have NO idea about...

— Charles Floate 📈 (@Charles_SEO) March 12, 2024

1 - For the last ~9 months, Google has been on what feels like a god given mission to turbocharge UGC signals... You see… pic.twitter.com/lnY1CwGcfp

The TLDR of that tweet is that Google is boosting User-Generated Content websites like Reddit and doesn’t care where the content originated from.

It’s doing this because searchers trust User-Generated Content sites more than blogs. The clickthrough rate on Reddit (which the previous click tests show is important) is higher.

Okay, should you try this?

I’m sharing this because for savvy digital marketers or anyone learning digital marketing, this knowledge is important to have. It’s vital to understand how the internet works. This information is even significant in the age of ChatGPT and Gemini as LLMs internally rank data based on similar metrics Google uses to recommend websites.

Even social media algorithms like IG and TikTok mirror Google’s Search algorithm in various ways.

Understanding how content algorithms work and what they value is so, so important.

But I’m not going to advocate black hat tactics because they could easily backfire.

For example, you could try doing the Reddit stuff mentioned above, get caught, and have your brand and any mentions of it shadow-banned on Reddit. That’d be bad.

Google could do a deal with Reddit where they get IP addresses, Chrome data, and more data of users, and match that to webmasters and consultants on a Google Search Console property. Then black hat tactics done improperly could be tracked back not just to a company, but to the Google users and identities themselves.This would make it harder to do various businesses in the future on platforms that share this sort of data.

That’d be bad too.

So it’simportant to understand how these things work.

But it’s also important to understand that the consequences of being dumb are high.

For now, brands are doing this to skirt these consequences – basically going through 3rd parties without any trace of connection to the original brand.

But it’s still worth treading in this area with a healthy amount of paranoia about what’s possible and what the consequences could be.

As for me, I play the long game and don’t have to look over my shoulder.

So, my dear readers, I’ll leave you with this.

It’s another podcast I made that people seemed to really like. It’s about how to do SEO in a white hat way after what has been learned from Google Leak.